Watch our July 27, 2023, virtual event, in which Senator Markey (D-Mass.), AI experts Kristina Iron and Sarah Myers West, and our own Daniel Rangel and Lori Wallach expose how Big Tech’s “digital trade” ploy would stymie the Administration’s efforts to effectively regulate AI.

You can view Daniel’s PowerPoint here, and scroll down to read the report that inspired this conversation. ⬇️

Introduction

The development of artificial intelligence (AI) technologies, the evolution of the internet, and the growth of the data economy are fundamentally transforming every aspect of our lives. AI technologies can lead to more efficient exchanges and decision-making. Yet unchecked and unregulated use of AI has proven harmful: it enables biased policing and prosecution, employment discrimination, intrusive worker surveillance, and unfair lending practices. Huge corporations like Google and Amazon also use problematic algorithms to self-preference their own products and services and crush business competitors, increasing their monopoly power. In the United States and around the world, governments seek to seize the benefits of the digital revolution while also countering tech firms’ ability to abuse workers, consumers, and smaller businesses.

In response, these powerful corporations are fighting back relentlessly. One under-the-radar strategy involves trying to lock in binding international rules that limit, if not altogether ban, key aspects of government oversight or regulation of the digital economy. To accomplish this, the tech industry is seeking to commandeer trade negotiations and establish what it calls “digital trade” agreements that would undermine the ability of Congress and U.S. agencies to rein in the industry’s abuses.

A key goal of the industry’s “digital trade” agenda is imposing rules that prevent governments from proactively monitoring, investigating, reviewing, or screening AI and algorithms, by forbidding government access to source code and perhaps even to detailed descriptions of algorithms.

Supporters of such source code and algorithm secrecy guarantees argue this is necessary to prevent untrustworthy governments from demanding tech firms hand over their algorithms, perhaps to be passed on to local companies that will knock off their inventions. That governments engaged in such conduct would be disciplined by new rules seems unlikely. Existing World Trade Organization (WTO) obligations and many nations’ domestic laws already require governments to provide copyright protections and guarantees against disclosure of companies’ confidential business information, including software’s source code and other algorithmic data. Businesses often complain that countries such as China do not comply with the existing rules.

Instead, these digital trade secrecy guarantees would bind scores of democratic countries worldwide that are considering new rules to prescreen or otherwise review the algorithms and source code running artificial intelligence applications in sensitive sectors. The industry’s real goal of foreclosing AI regulation is underscored by a 2023 U.S. government review, which found that the countries currently involved in U.S.-led trade negotiations have no policies in place or under consideration that require government access to, or transfer of, source code or proprietary algorithms.

The primary effect of limiting governments’ ability to demand source code and algorithm disclosure, then, would be to place the tech industry above regulatory oversight.

Casting a veil of secrecy over source code and algorithms is especially problematic now that policymakers are responding to a growing movement demanding algorithmic transparency and accountability. The goal is for governments not only to have the tools to sanction AI providers after their algorithms have been found to violate the law, but also to prevent discriminatory or abusive practices in the first place. To do so, many AI experts have recommended policies that enable effective third-party audits of AI systems and/or require governmental pre-market screening conditioned upon access to source code and/or other types of algorithmic information, particularly in high-risk sectors such as health services, credit, education, and employment.

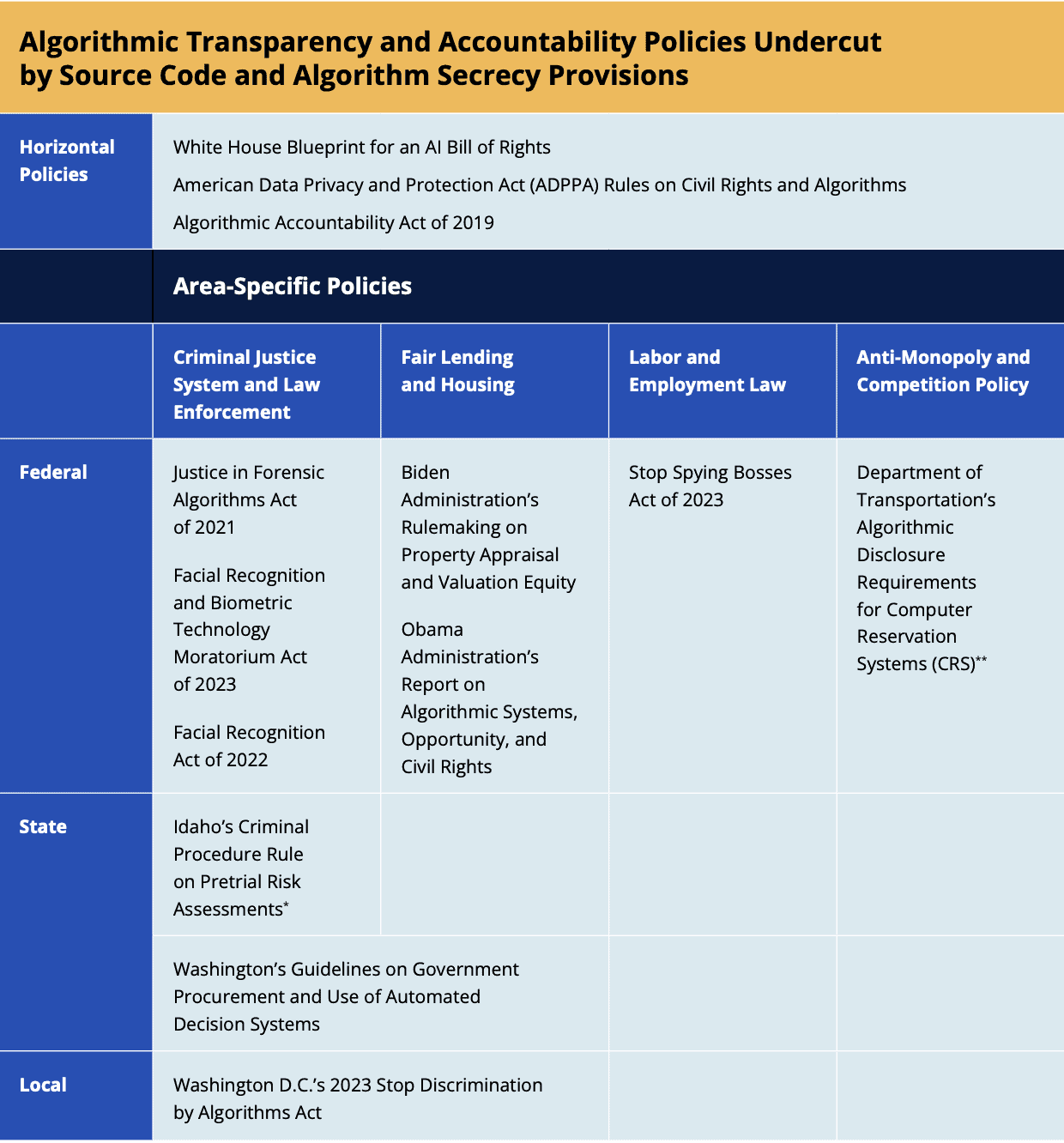

The chart below shows the horizontal policies, meaning those that apply to multiple sectors and domains, and the area-specific policies that would be undercut by including secrecy guarantees for source code and algorithms in trade deals.