Of the many unsettling aspects of Clearview AI, a shadowy facial-recognition-software company providing users access to a database of 3 billion photographs scraped from social media and video streaming sites, the one that has most unsettled me is the pervasive sense that there is nothing we can do about it. A quote from one of Clearview’s investors, David Scalzo, has been rattling around in my head since I first read it in Kashmir Hill’s initial New York Times article: “I’ve come to the conclusion that because information constantly increases, there’s never going to be privacy,” Scalzo told Hill. “Laws have to determine what’s legal, but you can’t ban technology. Sure, that might lead to a dystopian future or something, but you can’t ban it.”

Scalzo is presenting a candid expression of one of the enduring, foundational principles of Silicon Valley: The creep of new technologies is inevitable, and attempts to stop or control it are foolish. Even as the messianic confidence that characterized Silicon Valley philosophizing in the ’90s and ’00s has curdled, the religious faith in technological inevitability has remained. The sense that we are powerless to arrest — or in some cases even direct — the ceaseless expansion of technology into our public and private lives is somehow even stronger now that we’ve seen the negative effects of such expansion. In Silicon Valley, zealous techno-optimism has given way to a resigned but impotent techno-pessimism, shared by both fearful critics who can’t imagine an escape, and predatory cynics like Scalzo, for whom the revelation of an unstoppable, onrushing dystopian future represents, if nothing else, an investment opportunity. “It is inevitable this digital information will be out there,” Scalzo later told “Dilbert” creator Scott Adams in an interview. Sure, we might be headed toward hell — too bad we can’t do anything about it!

Or — imagine me pausing dramatically here — can we? There’s no corresponding fatalism around regulating (or prohibiting) technologies that aren’t computer networks. Most states and cities maintain bans on particular kinds of dangerous weapons, for example. Or take a deadly substance like lead, which used to be discussed in not-unfamiliar terms of indispensability and inevitability: “Useful, if not absolutely necessary, to modern civilization,” the Baltimore Afro-American wrote in 1906, years after it was first clear that lead was fatally toxic. Even decades later, lead was still touted as an inevitable constituent of the future: “One of the classic quotes from a doctor in the 1920s is that children will grow up in a world of lead,” historian Leif Fredrickson told CityLab recently. Not surprisingly, it took the U.S. decades longer than the rest of the world to eliminate the use of lead paint indoors. But still, we banned it.

Of course, lead paint is not immediately equivalent to facial-recognition technology for a number of reasons. But what they have in common is that surveillance software of this kind, as it exists and is used right now, causes clear social harms. Clare Garvie of the Georgetown Law Center for Privacy and Technology argues that the expansion of facial-recognition technology not only poses the obvious risk to our right to privacy, but also to our right to due process and even, potentially, our First Amendment rights to free speech and freedom of assembly, given the likelihood that police or corporate use of facial-recognition software at gatherings and protests would have a chilling effect. Worse, the technology is itself still in development, and is frequently fed with flawed data by the law-enforcement agencies using it.

The problem is that, as the last decade has shown us, after-the-fact regulation or punishment is an ineffective method of confronting rapid, complex technological change. Time and time again, we’ve seen that the full negative implications of a given technology — say, the Facebook News Feed — are rarely felt, let alone understood, until the technology is sufficiently powerful and entrenched, at which point the company responsible for it has probably already pivoted into some complex new change.

Which is why we should ban facial-recognition technology. Or, at least, enact a moratorium on the use of facial-recognition software by law enforcement until the issue has been sufficiently studied and debated. For the same reason, we should impose heavy restrictions on the use of face data and facial-recognition tech within private companies as well. After all, it’s much harder to move fast and break things when you’re not allowed to move at all.

This position — that we should not widely deploy a new technology until its effects are understood and its uses deliberated, and potentially never deploy it at all — runs against the current of the last two decades, but it’s gaining some acceptance and momentum. As unsettling as the details of Clearview AI’s business were, the response to their disclosure from legislators and law enforcement has been heartening. Last week, New Jersey’s attorney general, who had, without his permission or knowledge, been used in Clearview’s marketing materials, barred police departments in the state from using the app. On Monday, New York State senator Brad Hoylman introduced a bill that would stop law enforcement use of facial-recognition technology in New York State.

In doing so, New Jersey and New York are joining a handful of other states and municipalities enacting or considering bans on certain kinds and uses of facial-recognition technology. California, New Hampshire, and Oregon all currently ban the use of facial-recognition technology in police body cameras. Last year, Oakland and San Francisco enacted bans on the use of facial recognition by city agencies, including police departments. In Massachusetts, Brookline and Somerville have both voted in bans on government uses of facial-recognition technology, and Brookline’s state senator has proposed a similar statewide ban. The European Union was reportedly considering a five-year moratorium on the use of facial recognition, though those plans were apparently scrapped. And Bernie Sanders, alone among Democratic candidates for president, has proposed a complete prohibition on the use of facial-recognition technology by federal law enforcement.

Such bans are admittedly imperfect, in part because they’re pioneering and are necessarily incomplete, covering as they do only particular uses (like body cameras), polities, and institutions. One city banning its law enforcement from making use of facial recognition won’t necessarily stop a sheriff’s department from creating a surveillance dragnet — nor would it necessarily stop a private institution within a city from enacting its own facial-recognition program. For now, the bans don’t touch on third-party companies whose data is used by facial-recognition algorithms. (Among the most concerning aspects of Clearview is that the company built its reportedly 3 billion-image database by scraping, more or less legally, public images on social networks and video-streaming sites like Facebook.)

A well-executed ban would require thought and deliberation. (In San Francisco, the initial ban needed to be amended to allow employees to use FaceID on their government-issued iPhones, a feature that didn’t seem to have been adequately considered beforehand.) Robust facial-recognition regulation, Garvie says, would need to identify what, precisely, is meant by “facial recognition” — probabilistic matching of face images? Anonymized sentiment analysis? — and any current uses that would fall under that definition. It would also need to take into account the global nature of the technology industry: “Most of the face-recognition algorithms used by U.S. law enforcement agencies are not made by U.S. companies. So are we prohibiting the sale of that technology within U.S. borders?” Garvie asks. “What about if I go abroad and I’m caught on the face recognition system in Berlin, is that a violation?” It may be that this is what Scalzo really means by “you can’t ban technology” — we as human beings lack the political will and global capability to entirely prevent the use or development of it somewhere in the world.

Still, incomplete and imperfect though they may be, such bans represent the return of an idea largely discarded during Silicon Valley’s conquering expansion: that technological change is evitable, controllable, and even preventable — not merely by powerful CEOs but by democratically elected legislatures and executives. It feels a bit blasphemous to say so, as a citizen of the 21st century and beneficiary of the computer revolution, but: We should ban more technology.

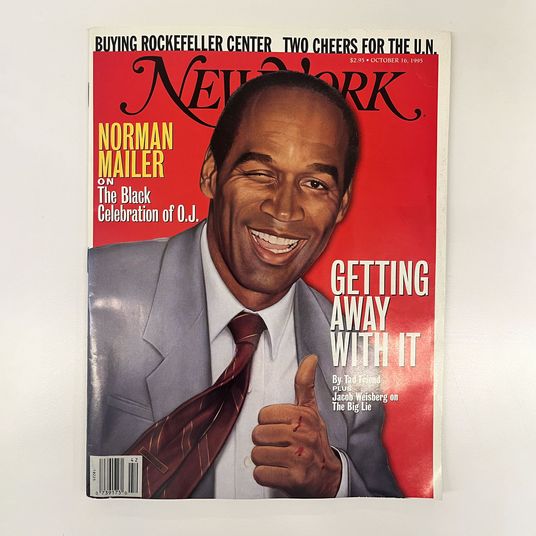

*This article appears in the February 3, 2020, issue of New York Magazine.*